CareerFoundry

Last updated: October 18, 2021.

Context

While CareerFoundry isn't my top priority in any given month (my full-time work is), I do care a lot about it.

CareerFoundry offers a self-paced UX design course designed to take an active learner 6-10 months to complete. Each student chooses a unique product to design, and I enjoy the challenge of providing the breadth of UX expertise they collectively demand of me.

What Mentorship Entails

Here are two noteworthy parts of mentoring the students:

- Mentorship Calls: throughout the course, my students can schedule hour-long calls with me at any open time on my calendar.

- Portfolio Reviews: each of the seven sections ends with my review of the student's portfolio work.

Mentorship Calls

The variable factor in a student's course is how many calls they book with their mentor. The best way I can help my students is to make my calls as valuable as possible and to encourage students to book calls often. Here are snapshots of some of my mentor calls:

Portfolio Reviews

Summary of My Mentorship

From September 2020 to September 2021, I logged over 400 hours of design mentorship in my off hours.

Desired Outcomes As a UX Mentor

Over my time as a mentor, I want my students to continually achieve:

- Higher course completion rates

- Shorter times until their first job offer

- Higher hiring rates

- Higher starting salaries

My Process

For the first eight months, I treated my design mentorship as an off-hours side gig, making nothing more than gradual improvements to the quality of my calls with students. My process has improved considerably since July 2021, and I have a running article series called Applying Statistical Process Control to My UX Mentorship. See a breakdown of the data:

- Part 1

- Part 2 (Coming Soon)

On a monthly basis, I have my CareerFoundry invoice items transcribed into a spreadsheet, where I can extract data on student progress and monitor the impact of the improvements I make to my mentorship system. The results are becoming more impactful as I optimize the process.
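As a rough illustration of what monitoring monthly figures with SPC can look like, here is a minimal Python sketch of an individuals (XmR) control chart calculation. It is not the exact method from my article series. The function names `xmr_limits` and `signals` are hypothetical, and the input is assumed to be one number per month (for example, calls booked) taken from the spreadsheet.

```python
from statistics import mean


def xmr_limits(values):
    """Return (center, lower, upper) limits for an individuals (XmR) chart."""
    if len(values) < 2:
        raise ValueError("need at least two data points")
    center = mean(values)
    # Moving range: absolute difference between consecutive points.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    # 2.66 is the standard XmR constant (3 / 1.128).
    spread = 2.66 * mean(moving_ranges)
    return center, center - spread, center + spread


def signals(values):
    """Return indexes of points that fall outside the control limits."""
    _, lower, upper = xmr_limits(values)
    return [i for i, v in enumerate(values) if v < lower or v > upper]
```

A point outside the limits suggests that something in the process actually changed, rather than ordinary month-to-month variation. That is the kind of signal worth checking after adjusting the mentorship system.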

My Mentorship Calls

Here are the calls I recommend to all my students.

I keep track of which calls each of my students has had.

Copy Work Done On Calls With Students

Here is one example of an effective exercise that designers advocate for improving design skills: mimicking the masters to understand their technique. It's an exercise I help my students get accustomed to.

Duolingo

| Original | Copy |
|---|---|
|  |  |

My Mentorship Results Thus Far

I won't have good data for student hiring metrics until the beginning of 2022, because CF grads typically take up to 6 months to get hired in a UX-related role. Even when I do start getting good data, those statistics will reflect my mentorship process from 2020. It will take at least a year to see the results of my current mentorship process.

I can prove, however, that my process is yielding ever more impactful results. See a breakdown of the data in my ongoing article series, Applying Statistical Process Control to My UX Mentorship:

- Part 1

- Part 2 (Coming Soon)

Examples of Student Product Design Work

TATO (UX Process and Storytelling)

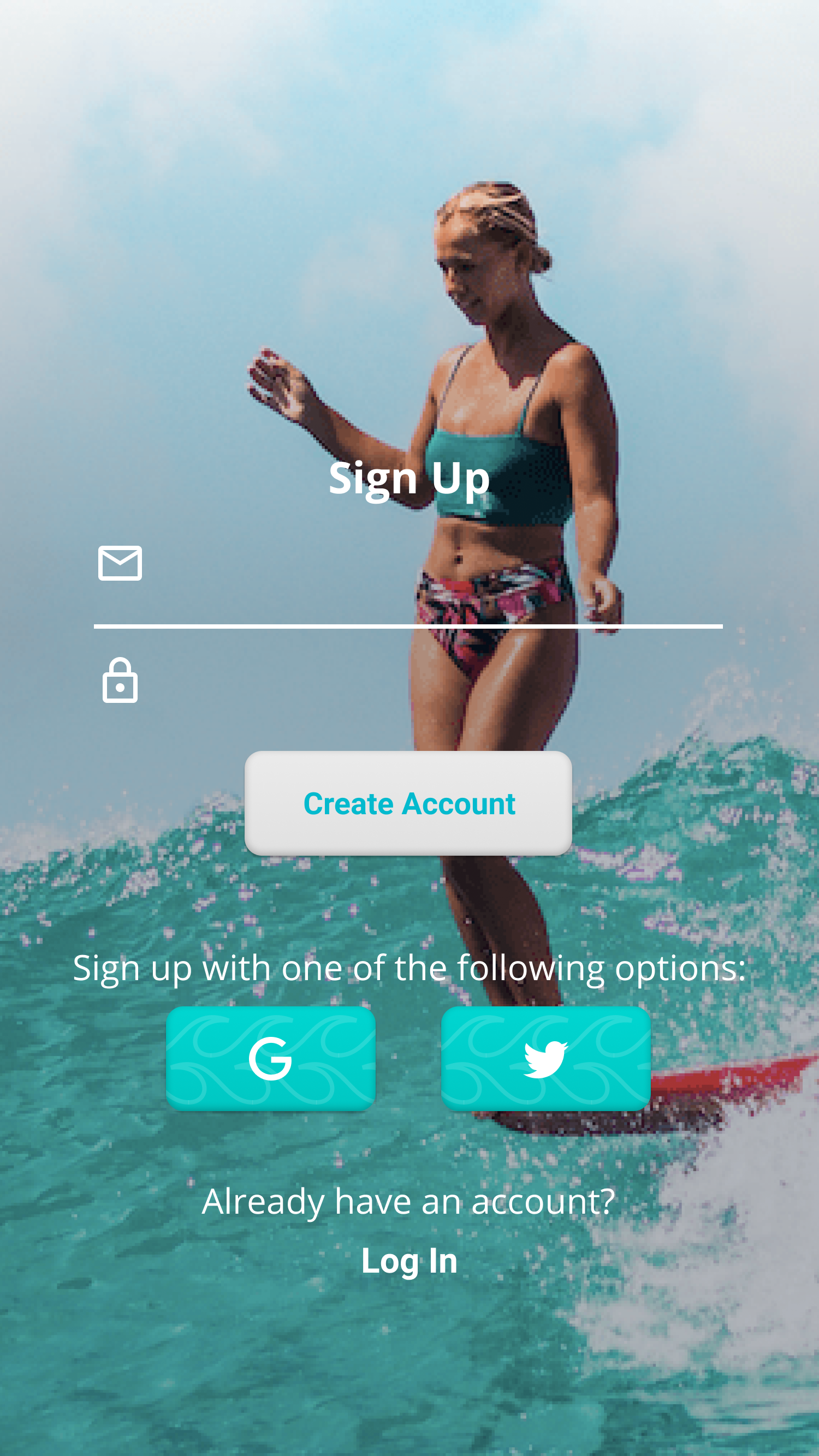

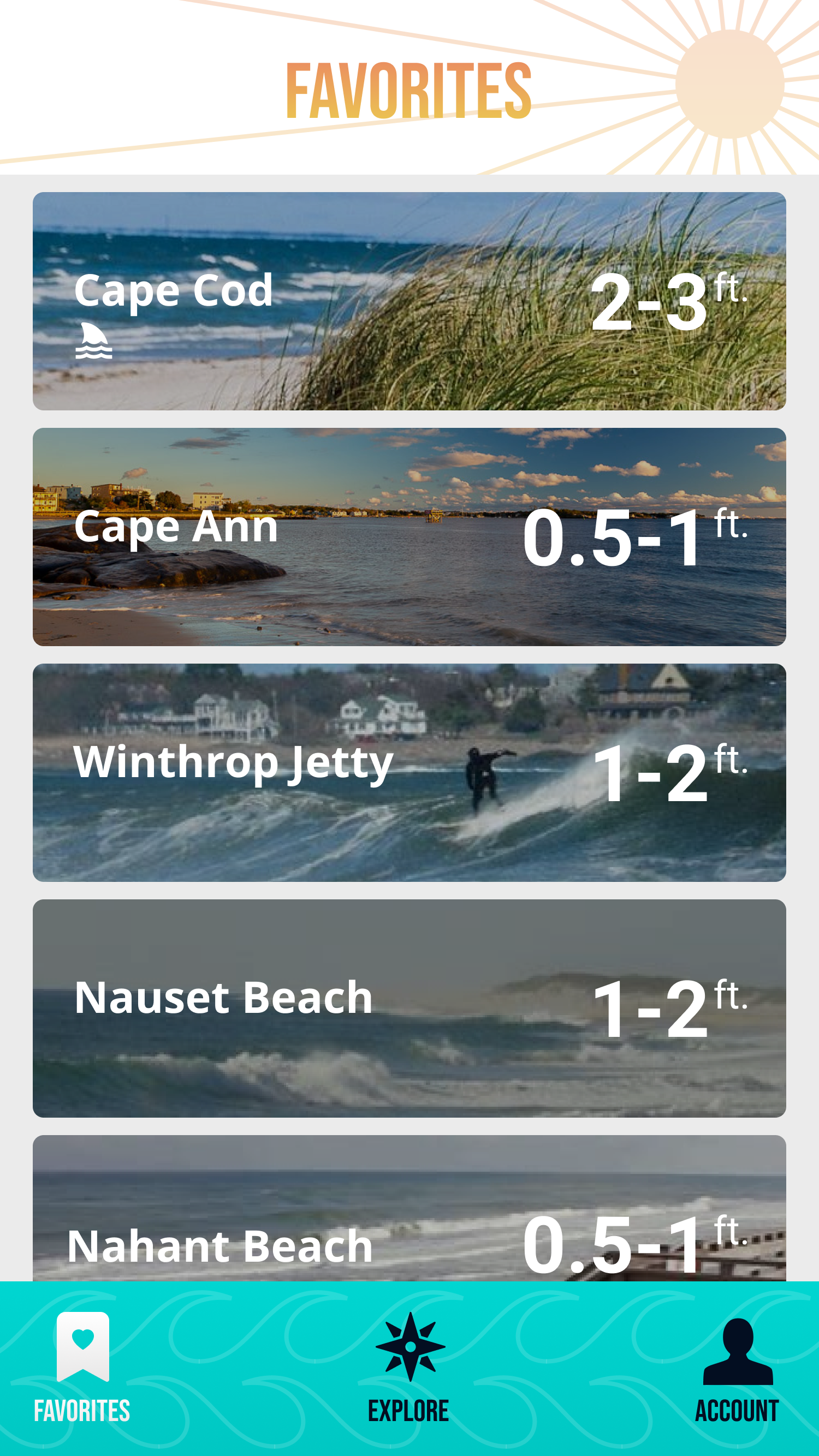

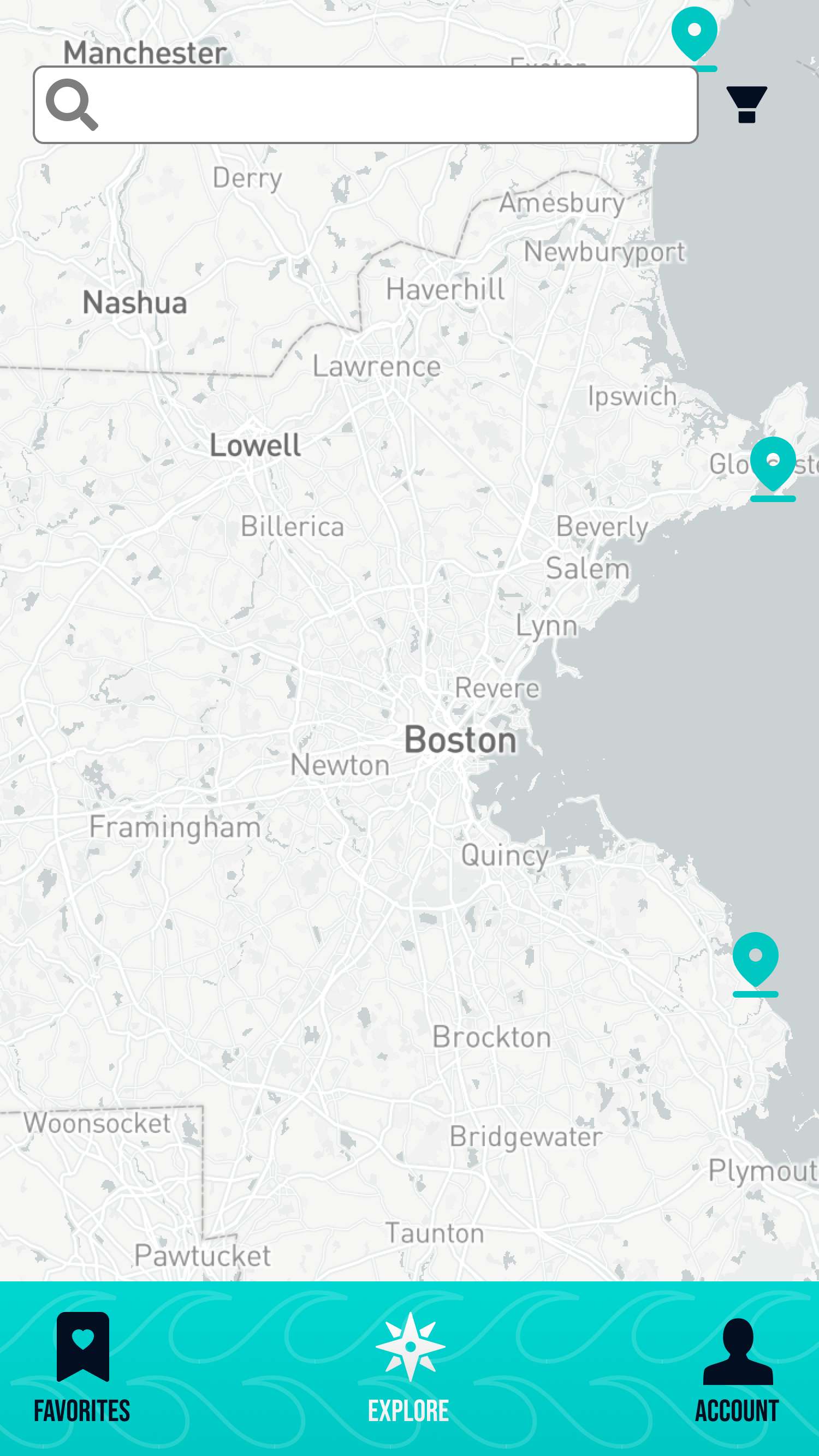

RADSURF (UI/UX)

I was heavily involved with this one.

Click through the prototype. (The blue boxes that appear on a click are where you can navigate to new screens.)

Personal Reflection

I really enjoy mentorship.

I love the opportunity to hone my skills through teaching. I enjoy expanding my network with up-and-coming designers, many of whom will eventually become great ones. I love autonomous work. I am in my element when creating something new, and helping my students with their portfolio pieces gives me an outlet for that. None of this was particularly revelatory, though, so I'll expound on my other main takeaways.

I love applying statistical process control to my work.

This was the first time I've taken a studied, disciplined approach to applying the SPC principles I've read about to a project. I've been amazed by the outcomes it facilitates. Moving forward, for the projects I care about, I want to track data wherever possible and visualize it on a routinely referenced dashboard.

I've come away from the insights gathered in Part 1 of my SPC article series with a few key takeaways.

a. The Impact of a Dashboard

Having a dashboard that gives me the most actionable data I've figured out how to collect thus far has done more to (1) show me where I can improve my process and (2) prompt me to consider how I might improve it than, I believe, a scrum master or manager could.

b. Data Analytics as a Key Companion Feedback Loop to User Research

As a long-time UX practitioner, mostly of early-stage products, I've associated improved outcomes with a qualitative feedback loop in which users and designers engage with each other directly. And yet most of the measurable improvements to my mentorship approach have come in response to quantitative metrics. Data insights prompted actions that, for the most part, could not have been easily (or even possibly) detected through user research, and the optimizations I have made so far have largely flown under the students' radar. For example:

- I really doubt my women students thought of themselves as less assertive, and yet telling them to be more assertive in using my available time did result in their doing so.

- My students could not have told me when they book calls with me, or which days they schedule most often, the way the data does. I'm also sure they didn't notice when Monday availability dropped and Wednesday/Thursday availability increased on my schedule. Regardless, the change resulted in their booking more calls with me.

- I'm sure that if I asked my students to guess the month in which I compiled a spreadsheet of all the data surrounding our mentorship engagement in order to track our progress, they would not know. And yet it has measurably improved my results and their course outcomes.
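The weekday pattern mentioned in the list above is the kind of thing a simple tally can surface. Here is a minimal Python sketch, assuming the call dates have already been pulled from the spreadsheet into a list. The name `calls_by_weekday` is hypothetical and this is not my actual tooling.

```python
from collections import Counter
from datetime import date

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def calls_by_weekday(call_dates):
    """Count booked calls per weekday, including days with zero calls."""
    # date.weekday() returns 0 for Monday through 6 for Sunday.
    counts = Counter(WEEKDAYS[d.weekday()] for d in call_dates)
    return {day: counts.get(day, 0) for day in WEEKDAYS}
```

Comparing these counts against the hours offered on each day is one way to see where availability and demand are mismatched.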

c. Translating Design to Business Outcomes

SPC is the best way I know (post-launch) to define a system of continuous improvement and translate design into improved business outcomes. Thus far in my career, I've focused primarily on designing and launching new products. In that context, it's easy to say how design translates to business outcomes. I haven't had as good an explanation for executives of mature businesses of how design translates into a more profitable business or why design should have a seat at the executive table. Now I can more easily associate metrics with product and service design work.